The Humans Behind AI’s Curtain

- Ian Mark Ganut

- Jun 8


The $15 Billion Shadow Workforce Powering Silicon Valley’s Brightest Dreams

Every time you chat with an AI, somewhere, a human taught it what to say.

Every time an AI filters out hate speech or spots a tumor, a human showed it what to look for.

Every "automated" system we celebrate today is built on millions of hours of unseen human labor, much of it cheap, much of it brutal.

The AI revolution is not what it seems.

This spring, at an artificial intelligence conference in San Francisco, a prominent tech executive let something slip that drew a nervous laugh from the room:

"Everyone talks about AI replacing humans. The truth is, there are more humans working on AI than ever. They’re just invisible."

That line stuck with me. The more I investigated, the more unsettling the picture became: Behind the bright, polished demos of generative chatbots, self-driving cars, and diagnostic AI tools lies a sprawling shadow industry, a digital sweatshop staffed by millions of underpaid workers training machines to behave as if they don’t need us anymore.

It’s a $15 billion global economy built on invisible labor. And with new European Union AI regulations set to take effect next month, its days in the shadows may be numbered.

The Illusion of Automation

Next week, Silicon Valley’s CEOs will use their earnings calls to tout their latest AI breakthroughs. We’ll hear about massive language models with near-human fluency, robots that can navigate the real world, and AI tools that promise to eliminate entire categories of human work.

What we won’t hear is how much of this future still runs on painfully human labor.

I’ve spent the past month tracking this hidden workforce, from AI trainers in Nigeria and Kenya to a growing domestic pipeline in the U.S. South. I’ve reviewed leaked internal documents, interviewed whistleblowers, and embedded with workers whose jobs are far more grueling than the marketing gloss suggests.

What I found is a system that relies on the very labor it claims to eliminate, and one that may not be sustainable for much longer.

Building the Machine

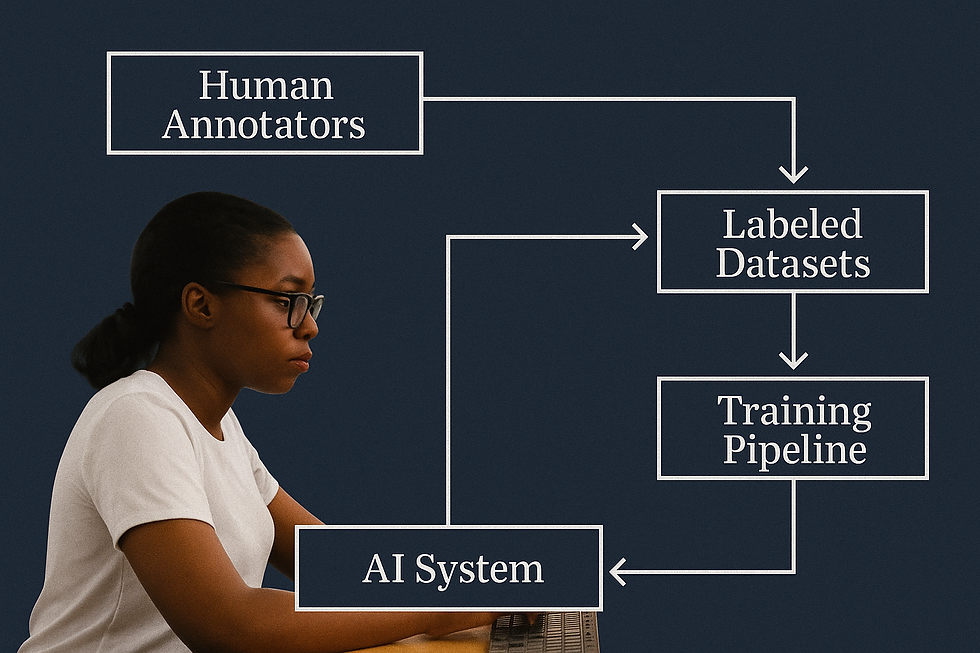

Every AI system that interacts with the world, whether it’s a chatbot, a content filter, or a self-driving car, requires vast amounts of labeled training data.

Someone has to show the machine what a stop sign looks like in bad lighting, what constitutes hate speech across dozens of cultural contexts, how to distinguish between sarcasm and sincerity, or whether an X-ray contains cancer.

These tasks are performed by a largely invisible class of workers: freelance annotators, data labelers, and content moderators spread across the globe.

The pay can be shockingly low. In parts of East Africa, annotators report earning as little as $1.50 per hour for painstaking work. In the U.S., I spoke with Sarah, a former public school teacher in Little Rock, Arkansas, who now spends 10 hours a day reviewing violent and sexually explicit material so that AI systems can “understand” what to block.

"It’s like being a digital janitor for the internet," she told me. "Except you never get to leave the basement."

Sarah earns $15 an hour with no benefits. She says many colleagues are former teachers, lured by job ads promising flexible online work, only to find themselves performing a kind of psychological triage for tech giants.

"Some of the content is so disturbing that people quit after a week," she said. "But the platforms always have more workers lined up."

A Mountain of Human Work

One former OpenAI data scientist, who spoke on condition of anonymity, put it bluntly:

"GPT-4 was built on a mountain of invisible human work, and we were pushed to ignore how much of it was exploitative."

That mountain is enormous.

An unpublished study by an AI economist, which I reviewed, estimates that GPT-4 required roughly 50 million hours of human labeling to reach its current capabilities. Comparable amounts of labor go into every major AI product on the market today.

Leaked internal Slack messages from one major AI company reveal debates over whether to pay global annotators in cryptocurrency to circumvent local labor laws, a strategy that would allow firms to avoid employee classification and skirt minimum wage protections.

"It was all about scale," said the former data scientist. "The more data we could label cheaply, the faster we could ship new models. Ethical concerns were a luxury."

A System Under Strain

This system of invisible labor has been able to scale in large part because it operates in legal and ethical gray zones.

Many annotators work for subcontractors or crowdworking platforms that act as intermediaries, shielding major tech firms from liability and scrutiny. Workers often have no direct relationship with the companies whose products they’re helping to build.

But that may soon change.

The European Union’s new AI Act, set to take effect next month, will impose stringent transparency and data governance requirements on AI systems, including clear disclosure of the human labor involved in training them.

"There’s an entire shadow supply chain behind these systems that regulators haven’t touched, until now," said one EU policy advisor involved in drafting the law. "The AI Act could force companies to reveal how much human labor goes into these so-called autonomous products."

Industry insiders are bracing for impact.

"It will be the GDPR moment for AI," one AI company executive told me privately. "The public is going to realize that the future they were sold still depends on millions of poorly paid humans doing invisible work."

The Oldest Fuel

The narrative of artificial intelligence is one of frictionless automation, a future where machines do the work and humans move on to higher pursuits.

But for now, the opposite is true. The most advanced AI systems in the world still depend on millions of hours of human toil: labeling, moderating, correcting, and cleaning the data that machines consume.

As one annotator in Nairobi told me: "Without us, these AI systems would be blind, deaf, and dumb."

The AI boom is real. But it is not purely technological. It is powered by the oldest fuel of all: cheap human labor.

And as regulators begin to peel back the curtain, we may soon be forced to reckon with the true costs of our automated future.

I just hope big companies read this.

Another example of why ethical AI matters and why we aren’t there yet.

Should be required reading for every tech investor.

Shocking but not surprising. Tech industry built on cheap labor, again.

This is why 'AI replacing jobs' is so misleading. It creates different kinds of exploitation.